A dance performance enhanced with technology

I’m Evelien, 24 years old, and from Holland, the country of tulips and windmills. I came to Fukuoka for a research project at Kyushu University and will be staying here for another 5 months.

About 2 years ago I finished my master’s thesis, for which I made an interactive dance performance with the Kinect.

Since I was asked to tell more about it, here I’ll describe the process, the software I used, and the problems I ran into. I hope this helps anyone who would like to do a similar project.

The piece I made for my graduation project was an interactive dance performance in which the dancer interacted in real time with visuals projected onto a screen behind her. The progression of the piece was based on my own idea of adapting to challenging situations by embracing them instead of resisting them. The music was pre-composed, and I controlled the progression of the visuals live by pressing different keys. In the video of the performance at the graduation show, you can see me in front of the dancer, sitting with my MacBook (that’s why the logo on my MacBook is covered, in case you were wondering).

Video: Tideline performance at the MMT grad show 2014, by Evelien Al (on Vimeo).

While working on the project, I held several workshops with the dancer to discuss the piece and hear her ideas about it. This really helped, because it gave me the chance to adapt the music and visuals to her personal style, which made the final performance more complete. It also let me foresee difficulties that might arise and things I still had to consider, such as the clothes the dancer would wear on stage. The dancer I worked with had much more performing experience than I did, so she could point out these important practical details.

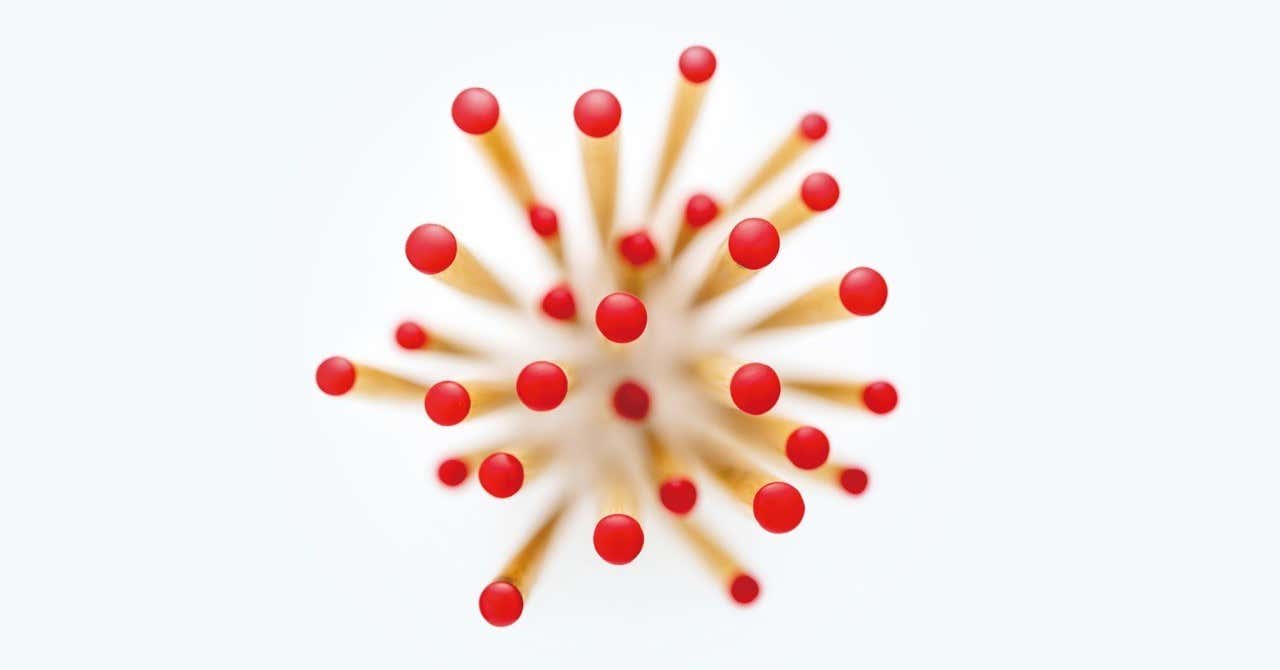

The music was pre-composed in Logic Pro. The visuals, however, had to respond to the dancer in real time, so I needed a motion capture device: in my case, the Kinect. The Kinect detects a person and their movements by projecting a pattern of infrared dots onto the scene and reading the reflected pattern with an infrared camera. From the distortion of this pattern it can measure depth, which lets it separate objects in the environment, such as people, from the background. The Kinect connects to a computer via USB, and with a specialised driver you can decode the incoming data and use it in any programming environment you like. Because I wanted to use my MacBook, I chose the open-source OpenNI driver.
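To make the idea of separating a person by depth a little more concrete, here is a minimal sketch in plain Java (the language Processing is built on) of the simplest possible version: keeping only the pixels whose depth falls inside the band where the dancer is expected to stand. It assumes a hypothetical input format, a flat row-major array of depth values in millimetres with 0 meaning "no reading"; this is not OpenNI’s API, and real drivers do considerably more than a fixed threshold.

```java
/**
 * Minimal sketch: separate a dancer from the background by keeping only pixels
 * whose depth lies inside a chosen band. Assumes a hypothetical input format:
 * a flat, row-major int array of millimetre values, where 0 means "no reading".
 */
public class DepthMask {

    /** Marks pixels whose depth lies between nearMm and farMm (inclusive) as foreground. */
    public static boolean[] threshold(int[] depthMm, int nearMm, int farMm) {
        boolean[] mask = new boolean[depthMm.length];
        for (int i = 0; i < depthMm.length; i++) {
            int d = depthMm[i];
            // 0 = no depth reading, so it is never counted as part of the dancer
            mask[i] = d != 0 && d >= nearMm && d <= farMm;
        }
        return mask;
    }

    /** Counts foreground pixels, e.g. to check whether anyone is on stage. */
    public static int count(boolean[] mask) {
        int n = 0;
        for (boolean b : mask) {
            if (b) n++;
        }
        return n;
    }

    public static void main(String[] args) {
        // A tiny 3x3 "frame": a wall at 4000 mm, a dancer at about 2000 mm, one missing reading
        int[] frame = {
            4000, 2000, 4000,
            2100, 1950, 0,
            4000, 2050, 4000
        };
        boolean[] mask = threshold(frame, 1500, 2500);
        System.out.println(count(mask)); // 4
    }
}
```

The near and far limits would need tuning to the actual stage; a fixed band is the crudest option but is easy to reason about during rehearsals.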

The visuals were written in Processing, a programming environment built on Java that simplifies it for visual work. It makes it very easy to draw shapes such as squares and lines. As mentioned above, I changed the visuals by pressing keys, with each key activating a different part of the code in my program.
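One way to organise key-controlled visuals like this is a small lookup from keys to scenes, sketched below in plain Java. The scene names and key bindings are hypothetical, not taken from the original program; in an actual Processing sketch you would call `onKey(key)` from Processing’s `keyPressed()` callback and draw the active scene inside `draw()`.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Minimal sketch of switching visuals with keys. Scene names and bindings are
 * hypothetical. In Processing, onKey(key) would be called from keyPressed(),
 * and draw() would render whichever scene is currently active.
 */
public class SceneSwitcher {

    enum Scene { BLACKOUT, LINES, SQUARES, PARTICLES }

    private final Map<Character, Scene> bindings = new HashMap<>();
    private Scene active = Scene.BLACKOUT;

    public SceneSwitcher() {
        bindings.put('0', Scene.BLACKOUT);
        bindings.put('1', Scene.LINES);
        bindings.put('2', Scene.SQUARES);
        bindings.put('3', Scene.PARTICLES);
    }

    /** Switches scene if the key is bound; unbound keys are ignored, so a mis-press is harmless. */
    public void onKey(char key) {
        Scene next = bindings.get(key);
        if (next != null) {
            active = next;
        }
    }

    public Scene active() {
        return active;
    }

    /** Stand-in for the drawing code each scene would run every frame. */
    public String render() {
        switch (active) {
            case LINES: return "drawing lines";
            case SQUARES: return "drawing squares";
            case PARTICLES: return "drawing particles";
            default: return "black screen";
        }
    }

    public static void main(String[] args) {
        SceneSwitcher s = new SceneSwitcher();
        s.onKey('2');
        s.onKey('x'); // unbound key: the scene stays SQUARES
        System.out.println(s.active() + ": " + s.render()); // SQUARES: drawing squares
    }
}
```

Ignoring unbound keys is a deliberate choice for live performance: an accidental key press leaves the current visuals running instead of breaking the show.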

My initial plan was to use joint detection, which tracks individual body parts such as the legs, feet, and head separately. You may know the result of this from the familiar Kinect stick figure.
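As an illustration of what joint detection provides, a stick figure is essentially a set of lines drawn between pairs of tracked joints. The sketch below assumes a hypothetical set of joint names and screen coordinates given as `float[]{x, y}`; the actual joint types and calls in OpenNI or the Kinect SDK differ, so treat this only as a picture of the data structure.

```java
import java.util.ArrayList;
import java.util.EnumMap;
import java.util.List;
import java.util.Map;

/**
 * Minimal sketch of building a stick figure from joint detection: each bone is a
 * pair of joints, and a line is drawn between their screen positions.
 * Joint names and the float[]{x, y} format are hypothetical, not a driver's API.
 */
public class StickFigure {

    enum Joint { HEAD, NECK, TORSO, LEFT_HAND, RIGHT_HAND, LEFT_FOOT, RIGHT_FOOT }

    // Each row is one bone: {from, to}
    static final Joint[][] BONES = {
        {Joint.HEAD, Joint.NECK},
        {Joint.NECK, Joint.TORSO},
        {Joint.NECK, Joint.LEFT_HAND},
        {Joint.NECK, Joint.RIGHT_HAND},
        {Joint.TORSO, Joint.LEFT_FOOT},
        {Joint.TORSO, Joint.RIGHT_FOOT}
    };

    /** Returns line segments {x1, y1, x2, y2}, skipping bones with an untracked joint. */
    static List<float[]> segments(Map<Joint, float[]> positions) {
        List<float[]> lines = new ArrayList<>();
        for (Joint[] bone : BONES) {
            float[] a = positions.get(bone[0]);
            float[] b = positions.get(bone[1]);
            if (a != null && b != null) {
                lines.add(new float[] {a[0], a[1], b[0], b[1]});
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        Map<Joint, float[]> pos = new EnumMap<>(Joint.class);
        pos.put(Joint.HEAD, new float[] {320, 80});
        pos.put(Joint.NECK, new float[] {320, 130});
        pos.put(Joint.TORSO, new float[] {320, 230});
        pos.put(Joint.LEFT_HAND, new float[] {220, 200});
        // Right hand and both feet are not tracked in this frame
        System.out.println(segments(pos).size()); // 3
    }
}
```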

However, I soon ran into problems with this. It turned out that some parts of the software I was using had been updated while others had not, and because of this mismatch my program kept crashing. The only way to get rid of the bug was to use an older version of Processing and skip joint detection.

Instead, I used blob detection, which detects the outline of a person and treats the enclosed area as a single shape, or blob. This meant rewriting my program in less than a week; I ended up using programs by Amnon Owed and adapting them to my piece. Even though the performance went really well in the end, I have never been as stressed as during that week!
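In its simplest form, blob detection can be sketched as connected-component labelling on a foreground mask, such as one produced by depth thresholding: neighbouring foreground pixels are grouped into blobs, the largest blob is taken to be the dancer, and its outline is the set of blob pixels that touch the background. This is a generic flood-fill approach assumed here for illustration, with a hypothetical `BlobDetector` class; it is not necessarily how Amnon Owed’s programs or any particular library implement it.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

/**
 * Minimal sketch of blob detection on a foreground mask: group neighbouring
 * foreground pixels with a 4-connected flood fill, keep the largest blob as
 * "the dancer", and find its outline. Generic technique, not a library's code.
 */
public class BlobDetector {

    /** Returns the pixel indices of the largest 4-connected blob in the mask. */
    static List<Integer> largestBlob(boolean[] mask, int width, int height) {
        boolean[] visited = new boolean[mask.length];
        List<Integer> best = new ArrayList<>();
        for (int start = 0; start < mask.length; start++) {
            if (!mask[start] || visited[start]) continue;
            // Breadth-first flood fill from an unvisited foreground pixel
            List<Integer> blob = new ArrayList<>();
            ArrayDeque<Integer> queue = new ArrayDeque<>();
            queue.add(start);
            visited[start] = true;
            while (!queue.isEmpty()) {
                int p = queue.poll();
                blob.add(p);
                int x = p % width, y = p / width;
                // Left, right, upper and lower neighbours
                int[][] nbrs = {{x - 1, y}, {x + 1, y}, {x, y - 1}, {x, y + 1}};
                for (int[] n : nbrs) {
                    if (n[0] < 0 || n[0] >= width || n[1] < 0 || n[1] >= height) continue;
                    int q = n[1] * width + n[0];
                    if (mask[q] && !visited[q]) {
                        visited[q] = true;
                        queue.add(q);
                    }
                }
            }
            if (blob.size() > best.size()) best = blob;
        }
        return best;
    }

    /** Outline = blob pixels with at least one 4-neighbour outside the blob or the image. */
    static List<Integer> outline(List<Integer> blob, int width, int height) {
        boolean[] inBlob = new boolean[width * height];
        for (int p : blob) inBlob[p] = true;
        List<Integer> edge = new ArrayList<>();
        for (int p : blob) {
            int x = p % width, y = p / width;
            // Image-border checks come first, so the neighbour lookups never go out of bounds
            boolean border = x == 0 || x == width - 1 || y == 0 || y == height - 1
                || !inBlob[p - 1] || !inBlob[p + 1] || !inBlob[p - width] || !inBlob[p + width];
            if (border) edge.add(p);
        }
        return edge;
    }

    public static void main(String[] args) {
        // 5x4 mask: a 3x3 "dancer" blob in the top-left and one stray pixel in the bottom-right
        int w = 5, h = 4;
        boolean[] mask = new boolean[w * h];
        for (int y = 0; y < 3; y++)
            for (int x = 0; x < 3; x++)
                mask[y * w + x] = true;
        mask[3 * w + 4] = true;
        List<Integer> blob = largestBlob(mask, w, h);
        System.out.println(blob.size());                 // 9
        System.out.println(outline(blob, w, h).size());  // 8
    }
}
```

Keeping only the largest blob is a simple way to ignore sensor noise and small stray objects on stage, at the cost of assuming there is only one performer.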

I would therefore recommend that anyone who wants to work with the Kinect use a Windows computer with the official Kinect SDK instead. It is much more robust because it has been kept up to date. And if you’re using a projector, keep its image quality in mind: the contrast may be much lower than on your computer screen, making the visuals blurry and harder to see.

All in all, I really enjoyed this project, as it combined many things I love, and I learned a lot from it. If you have some awesome ideas for the Kinect or Processing, please try them out, and if you have any questions, you can always contact me.